Hello everyone. Thank you for visiting my blog. My first post on Blogger with this account was 12 years ago. I think it is time to try something new. I am transitioning over to a new blog at https://kevinmarquette.github.io/

This new blog will be based on markdown and sourced out of my GitHub account. I am trying to connect more with community projects, and I find myself working out of GitHub a lot. I also post a lot of content at http://www.reddit.com/r/powershell that should translate more easily into posts on the new blog.

Only time will tell how long I stick with the new blog. I am leaving this one live because I have a lot of content that I don't think will export very well. I say that because I use lots of script blocks and have changed my approach several times over the years. The source on some of them looks good online but is very nasty to parse.

Some problems you just can't search for. Here are some I wish were more searchable; this blog is my attempt to make that happen.

Saturday, October 15, 2016

Monday, March 21, 2016

Powershell: Using optional parameters

Have you ever created a cmdlet that was a wrapper for another command, but with some optional parameters added? This is a very common pattern. But how do you pass, or not pass, that optional parameter to the underlying command? How often do you write an if or switch to check for it and end up with the function call in your code twice? Once with the param and once without it.

It comes up a lot when dealing with credentials. I saw this example in someone else's code:

if($Credential)
{
    foreach($Computer in $ComputerName)
    {
        $Disks += Get-WmiObject Win32_LogicalDisk -ComputerName $Computer -Filter "DeviceID='C:'" -Credential $Credential -ErrorAction SilentlyContinue
    }
}
else
{
    foreach($Computer in $ComputerName)
    {
        $Disks += Get-WmiObject Win32_LogicalDisk -ComputerName $Computer -Filter "DeviceID='C:'"
    }
}

The credential was optional in his use of the cmdlet. I just don't like to see code repeated like that. Sure, he could have placed the if logic inside the foreach loop, but he would still have the call in two places. I also see the error action is set to SilentlyContinue for only one of them. I can't tell if that is intentional or if it should have been on both.

Here is the same code, using a hashtable and splatting to deal with the optional parameter.

$LogicalDiskArgs = @{
    Class  = 'Win32_LogicalDisk'
    Filter = "DeviceID='C:'"
}

if($Credential)
{
    $LogicalDiskArgs.Credential  = $Credential
    $LogicalDiskArgs.ErrorAction = 'SilentlyContinue'
}

foreach($Computer in $ComputerName)
{
    $Disks += Get-WmiObject @LogicalDiskArgs -ComputerName $Computer
}

Splatting makes this really easy to do. I also like how it clearly shows that the error action goes together with the optional parameter.
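The same pattern can be tried without WMI or a remote machine. This is a minimal sketch built on two hypothetical functions: `Get-InnerData` stands in for the underlying command (it just reports which parameters it actually received) and `Get-OuterData` is the wrapper with an optional `-Credential`. It checks `$PSBoundParameters.ContainsKey('Credential')` instead of `if($Credential)` so the test is whether the caller passed the parameter at all; either check works for this pattern.

```powershell
# Hypothetical stand-in for the underlying command (Get-WmiObject in the post).
# It returns the names of the parameters it was actually called with.
function Get-InnerData
{
    [CmdletBinding()]
    param(
        [string]$ComputerName,
        [pscredential]$Credential
    )

    $PSBoundParameters.Keys | Sort-Object
}

# Hypothetical wrapper with an optional -Credential parameter.
function Get-OuterData
{
    [CmdletBinding()]
    param(
        [string[]]$ComputerName,
        [pscredential]$Credential
    )

    # Only add the optional parameter to the splat when the caller supplied it.
    $InnerArgs = @{}
    if($PSBoundParameters.ContainsKey('Credential'))
    {
        $InnerArgs.Credential = $Credential
    }

    foreach($Computer in $ComputerName)
    {
        # A single call site covers both the "with" and "without" cases.
        Get-InnerData @InnerArgs -ComputerName $Computer
    }
}
```

Calling `Get-OuterData -ComputerName 'server1'` returns only `ComputerName`; adding `-Credential (Get-Credential)` returns `ComputerName` and `Credential`, with no duplicated call in the wrapper.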

Wednesday, March 16, 2016

Showing the intent of your code in Powershell

I hang out on /r/Powershell quite a bit, and I often find myself talking about writing code that shows your intent. When I initially started working with Powershell, I was kind of put off by its verbosity. Over time I came to accept it, and things like tab completion make it easy to work with.

I turned that corner a long time ago and really like the verbosity now. When you combine that with clean code practices that produce self-documenting code, Powershell becomes very easy to read and work with. I like it when my code makes its intent obvious. I share a lot of code, so this is something I find important in my work.

My simple example is splitting a string and skipping the first item in the list. This is the code sample that was getting passed around:

$obj = (Get-ADGroup 'GroupName').DistinguishedName.split(',')
$obj[1..$($obj.count - 1)]

It worked and accomplished the goal. But compare it to this and think about which one shows the intent of the code better:

(Get-ADGroup 'GroupName').DistinguishedName -split ',' | Select-Object -Skip 1

In my code, I would take it one more step:

$ADGroup = Get-ADGroup 'GroupName'
$ADGroup.DistinguishedName -split ',' | Select-Object -Skip 1

All three of them are valid Powershell and this is just a style preference, but having a solid and consistent style can make your code a lot easier to read.
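To compare the approaches without Active Directory, here is a minimal sketch that swaps `Get-ADGroup` for a hypothetical literal distinguished name. Both expressions produce the same items; the difference is how plainly they say "skip the first one".

```powershell
# Hypothetical DN standing in for (Get-ADGroup 'GroupName').DistinguishedName
$DistinguishedName = 'CN=GroupName,OU=Groups,DC=contoso,DC=com'

# Index/range version: the reader has to work out that 1..(Count - 1) means "skip the first"
$parts = $DistinguishedName.Split(',')
$byIndex = $parts[1..($parts.Count - 1)]

# Pipeline version: Select-Object -Skip 1 states the intent directly
$bySkip = $DistinguishedName -split ',' | Select-Object -Skip 1
```

Both `$byIndex` and `$bySkip` hold `OU=Groups`, `DC=contoso` and `DC=com`.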

Tuesday, January 26, 2016

VM IO stalls on HP DL360 G7 ESXi 5.5.0 2403361 with a HP Smart Array p410 and a spare drive

We have a product that is very sensitive to network issues, so much so that it logs an error whenever it goes 5 seconds without communication. It just so happened that we started logging this error after we moved that product to an HP DL360 G7 running ESXi 5.5. We first went down the development route to track down the issue, because we were doing load testing on that product at the time.

At some point, we noticed these errors even when the system

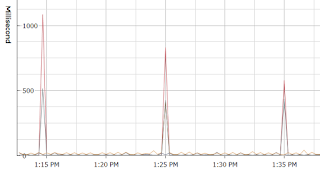

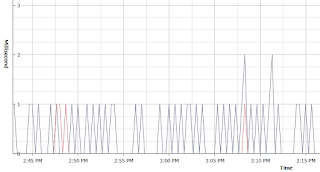

was not under load. We could see these perfectly timed latency spikes at the

same time as we had those errors. Then we shifted our focus to

system/infrastructure.

This is what our datastore latency looked like:

Then we discovered the strangest thing. We had this latency even when no VMs were running. We configured a second system just like the first to rule out the hardware. Sure enough, both systems had the problem.

I started juggling drivers. I had been quick to load new drivers and firmware, so that felt like a good place to start. I slowly walked the storage controller driver back until the issue went away. I settled on this driver:

Type: Driver - Storage Controller

Version: 5.5.0.60-1(11 Jun 2014)

Operating System(s): VMware vSphere 5.5

File name: scsi-hpsa-5.5.0.60-1OEM.550.0.0.1331820.x86_64.vib (65 KB)

esxcli software vib install -v file:/tmp/scsi-hpsa-5.5.0.60-1OEM.550.0.0.1331820.x86_64.vib --force --no-sig-check --maintenance-mode

I didn’t like this as a solution because I had new storage controller firmware running with a very old storage controller driver. I found another DL360 G7 that someone else had configured for another project, and it was running well. They didn’t update anything; they just loaded the ESXi DVD and ran with it.

I came to the conclusion that it had a valid firmware/driver combination. I liked that setup better than what I had, so that became the recommended deployment: don’t update the firmware, and use the DVD so you don’t have to mess with drivers.

Then we did our next deployment with that configuration, and the issue resurfaced. After ruling out some other variables that could have impacted the results, I ended up rolling the driver back to fix it.

After pinning the driver down, I went hunting for more information on it. Searching for latency issues with storage gives you all kinds of useless results. I was hopeful that searching for the driver would lead me to some interesting discussions.

I ended up with an answer to my issue in that last link. But there were a lot of other issues.

In summary: the problem appears with an HPSA driver later than v60 driving an HP Smart Array P410 controller of any firmware vintage with a spare drive configured. The spare drive configuration seems to cause an I/O back-feed into the driver, blowing it up at irregular intervals. This issue will not surface unless a spare drive is configured on the array controller.

At the time of writing this, the v114 driver also does not fix the issue.

Friday, January 22, 2016

Powershell: Write-Host vs Write-Output in Functions?

This comes up every so often. This is the question I saw recently:

I like to have my scripts and functions display a minimal level of progress on-screen by default, with more detailed progress listed by using -verbose. Write-Host works fine for the minimal progress display, but I keep reading horror stories of how the sky will fall if you use Write-Host instead of Write-Output in Powershell. However ... Write-Output seems to pollute the pipeline when trying to use the results of a function in a pipeline.

Is there a best practice for this? Should you use Write-Verbose for everything, or is Write-Host ok sometimes, or is there some other common process? - /u/dnisthmnace

One could argue that he has a solid understanding of it and is on the right track. You can write a lot of code for yourself this way and everything will be OK.

The higher-level point is that Write-Host is often a mental crutch that will slow the advancement of your Powershell skills. The problem is not so much that you are using it, but why you think you need to be using it. But don't get hung up on that right now.

My advice is to start breaking your scripts into smaller pieces. Think of them as either tools or controller scripts. Tools are generic and reusable. The tools should be single purpose (do one thing and do that thing well). In your tools, use Write-Verbose.
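To make the tool idea concrete, here is a minimal sketch with two hypothetical functions. `Get-SquareNoisy` reports progress with Write-Output, so the progress text ends up mixed into its results; `Get-Square` reports the same progress with Write-Verbose, so its pipeline output is only data and the messages appear only when you ask for them with -Verbose.

```powershell
# Hypothetical "noisy" tool: the progress message goes to the output stream,
# so anything consuming the results receives the message as well.
function Get-SquareNoisy
{
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        [int]$Number
    )
    process
    {
        Write-Output "Squaring $Number"
        $Number * $Number
    }
}

# Hypothetical single-purpose tool: progress goes to the verbose stream,
# so the pipeline only carries the results.
function Get-Square
{
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, ValueFromPipeline = $true)]
        [int]$Number
    )
    process
    {
        Write-Verbose "Squaring $Number"
        $Number * $Number
    }
}
```

`1..3 | Get-Square` returns just 1, 4 and 9, and `1..3 | Get-Square -Verbose` adds the messages on the verbose stream without changing that output. `1..3 | Get-SquareNoisy` returns six objects, with the progress strings mixed in among the numbers.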

Your controller scripts glue your tools together. It's OK for them to have a little more polish for the end user. This is where Write-Host would belong if you are using it (purists can still use Write-Output). The thing is that this is an end-of-the-line script. It is not a script that should be consumed by other scripts.

Personally, I use Write-Verbose for everything that I don't want on the pipe (even in my controller scripts). I do this so I can choose when I see the output and when I don't. When you start reusing code, this becomes more and more important. Before you know it, you have functions calling functions calling functions, or functions executing with hundreds of data points. I can even set verbose on a function call but let that function suppress noisy sub functions.
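Here is a minimal sketch of that last point, using two hypothetical functions. When `Get-Summary` is called with -Verbose, its verbose preference is visible to the functions it calls, so `Get-Detail` would normally chatter too; passing `-Verbose:$false` on that one call keeps the sub function quiet while the outer function still reports its own progress.

```powershell
# Hypothetical noisy sub function.
function Get-Detail
{
    [CmdletBinding()]
    param([int]$Id)

    Write-Verbose "Looking up detail for $Id"
    "detail-$Id"
}

# Hypothetical outer function that wants its own verbose output, but not Get-Detail's.
function Get-Summary
{
    [CmdletBinding()]
    param([int[]]$Id)

    foreach($item in $Id)
    {
        Write-Verbose "Processing $item"
        # -Verbose:$false overrides the verbose preference coming from the caller.
        Get-Detail -Id $item -Verbose:$false
    }
}
```

`Get-Summary -Id 1,2 -Verbose` shows "Processing 1" and "Processing 2" but none of the "Looking up detail" messages, and the output is still `detail-1` and `detail-2`.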

I use Write-Verbose in my controller scripts because they can easily turn into tools and I don't have to go back and change the output.

I hope that sheds some light on the discussion. It's not the end of the world to use Write-Host; just know that you don't need to use it. Once you understand that, you will start writing better Powershell.

Thursday, January 21, 2016

Setting default parameters for cmdlets without changing them with $PSDefaultParameterValues

I ran across something again recently that I find really cool. You can add a command to your profile that sets default parameter values for any cmdlet. I first saw this as a way to have Format-Table run with the -AutoSize switch every time.

$PSDefaultParameterValues.Add('format-table:autosize',$True)

That is a cool idea if you are stuck on Powershell 4 or older. Now that Powershell 5 kind of does that already, I didn’t think much about it. Some time later, I found myself wanting to share a cmdlet with someone, and its default parameter values were specific to the way I used it. I didn’t really like that, so I changed it and made it into a better tool.

Except now I was supplying those values over and over every time I used it myself. I decided there had to be a better way, and that’s when I finally remembered this trick. It can work on any cmdlet.

$PSDefaultParameterValues.Add('Unlock-ADAccount:Server','domain.com')

So now I can keep my cmdlets more generic and still get default parameter values that suit the way I work. I can stick in common server names or credentials, or really any value I can think of. Just add it to my profile and I am all set.
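Here is a minimal sketch you can run without Active Directory, assuming a hypothetical advanced function `Get-Greeting` in place of a shared tool like Unlock-ADAccount. The key format is `CommandName:ParameterName`, the defaults apply to cmdlets and advanced functions (hence the `[CmdletBinding()]`), and a value you pass explicitly still wins. Assigning through the indexer sets the entry whether or not it already exists, which is handy if your profile gets run more than once.

```powershell
# Hypothetical advanced function standing in for a shared tool such as Unlock-ADAccount.
# [CmdletBinding()] matters: $PSDefaultParameterValues applies to cmdlets and advanced functions.
function Get-Greeting
{
    [CmdletBinding()]
    param([string]$Name = 'World')

    "Hello, $Name"
}

# Personal default, the kind of line you would put in your profile.
$PSDefaultParameterValues['Get-Greeting:Name'] = 'Admin'

$withDefault  = Get-Greeting              # picks up 'Admin' from $PSDefaultParameterValues
$withExplicit = Get-Greeting -Name 'Ada'  # an explicit argument still wins

# Remove the entry to fall back to the function's own default.
$PSDefaultParameterValues.Remove('Get-Greeting:Name')
$afterRemove = Get-Greeting
```

The three calls return `Hello, Admin`, `Hello, Ada` and `Hello, World`, so the shared function keeps its generic default while your session gets your personal one.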

Wednesday, January 20, 2016

Powershell Error: Cannot process argument because the value of argument "value" is not valid. Change the value of the "value" argument and run the operation again.

This Powershell error has tripped me up a few times now: Cannot process argument because the value of argument "value" is not valid. Change the value of the "value" argument and run the operation again. I can usually fix it without ever really knowing how. I hate those types of solutions, so this time I decided to get to the bottom of it.

I started using a new template snippet for my advanced functions, and I tracked this issue down to one small detail in that template. Here is the code:

function New-Function
{
    <#
    .SYNOPSIS
    .EXAMPLE
    New-Function -ComputerName server
    .EXAMPLE
    .NOTES
    #>
    [cmdletbinding()]
    param(
        # Pipeline variable
        [Parameter(
            Mandatory = $true,
            HelpMessage = '',
            Position = 0,
            ValueFromPipeline = $true,
            ValueFromPipelineByPropertyName = $true
        )]
        [Alias('Server')]
        [string[]]$ComputerName
    )

    process
    {
        foreach($node in $ComputerName)
        {
            Write-Verbose $node
        }
    }
}

I start with this and fill in the rest of my code as I go.

The full error message is this:

Cannot process argument because the value of argument "value" is not valid. Change the value of the "value" argument and run the operation again.
At line:16 char:11
+ [Parameter(
+ ~~~~~~~~~~~
    + CategoryInfo          : InvalidOperation: (:) [], RuntimeException
    + FullyQualifiedErrorId : PropertyAssignmentException

The function also acts kind of strangely. I can tab complete the name but not any arguments, which tells me it is not loading correctly. If I try to run Get-Help on the command, I get the exact same error message.

It took me a little trial and error, but I narrowed it down to one of the parameter attributes. In this case, if HelpMessage was left as an empty string (''), it would error out. I would usually remove this line or add a real message eventually.

# Pipeline variable
[Parameter(
    Mandatory = $true,
    HelpMessage = '',
    Position = 0,
    ValueFromPipeline = $true,
    ValueFromPipelineByPropertyName = $true
)]
[Alias('Server')]
[string[]]$ComputerName

So if you are getting this error message, pay attention to the actual values in your parameter attributes. If any of them are blank or null, you may run into this.
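One way to catch this before it bites is to scan your scripts for blank values. This is a minimal sketch, assuming a hypothetical helper `Find-EmptyHelpMessage` that uses the Powershell parser (`[System.Management.Automation.Language.Parser]::ParseInput`) to list the line numbers of any attribute argument written as `HelpMessage = ''`. Parsing only builds the syntax tree, so the broken template can be checked without triggering the error. It only looks at HelpMessage; extend the filter if other attributes in your template use blank placeholders.

```powershell
# Hypothetical helper: report the line numbers of HelpMessage = '' inside attributes.
function Find-EmptyHelpMessage
{
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true)]
        [string]$ScriptText
    )

    $tokens = $null
    $parseErrors = $null
    # Parse only; nothing in the text is executed.
    $ast = [System.Management.Automation.Language.Parser]::ParseInput($ScriptText, [ref]$tokens, [ref]$parseErrors)

    # Find named attribute arguments such as [Parameter(HelpMessage = '')]
    $found = $ast.FindAll({
        param($node)
        $node -is [System.Management.Automation.Language.NamedAttributeArgumentAst] -and
        $node.ArgumentName -eq 'HelpMessage' -and
        $node.Argument -is [System.Management.Automation.Language.StringConstantExpressionAst] -and
        $node.Argument.Value -eq ''
    }, $true)

    $found | ForEach-Object { $_.Extent.StartLineNumber }
}

$template = @'
function New-Function
{
    [CmdletBinding()]
    param(
        [Parameter(Mandatory = $true, HelpMessage = '')]
        [string[]]$ComputerName
    )
}
'@

Find-EmptyHelpMessage -ScriptText $template   # returns 5 (the [Parameter()] line)
```

To check a script file instead, pass its contents, for example `Find-EmptyHelpMessage -ScriptText (Get-Content -Path .\MyTemplate.ps1 -Raw)` (the path here is just a placeholder).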

In the end, I updated my snippet to use a different placeholder.

Problem solved.
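For reference, here is a minimal sketch of the template with a non-empty placeholder. The exact placeholder isn't given here, so the HelpMessage text below is a hypothetical example, and the process block also emits `$node` so the function returns something you can check.

```powershell
function New-Function
{
    [CmdletBinding()]
    param(
        # Pipeline variable
        # HelpMessage uses a hypothetical non-empty placeholder instead of ''.
        [Parameter(
            Mandatory = $true,
            HelpMessage = 'Enter one or more computer names',
            Position = 0,
            ValueFromPipeline = $true,
            ValueFromPipelineByPropertyName = $true
        )]
        [Alias('Server')]
        [string[]]$ComputerName
    )

    process
    {
        foreach($node in $ComputerName)
        {
            Write-Verbose $node
            # Added for this sketch so the function has output to verify.
            $node
        }
    }
}
```

With the placeholder filled in, `'server1','server2' | New-Function -Verbose` binds the pipeline input, writes each name to the verbose stream and returns both names.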
