We have a product that is very sensitive to network issues, so much so that it logs an error whenever it goes 5 seconds without communication. It just so happened that we started logging this error after we moved that product to an HP DL360 G7 running ESXi 5.5. We first went down the development route to track down the issue because we were doing load testing on that product at the time.

At some point, we noticed these errors even when the system

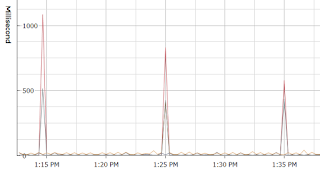

was not under load. We could see these perfectly timed latency spikes at the

same time as we had those errors. Then we shifted our focus to

system/infrastructure.

This is what our datastore latency looked like:

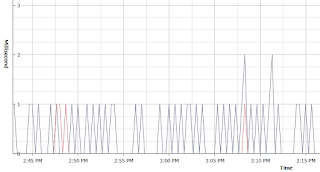

Then we discovered the strangest thing. We had this latency even when no VMs were running. We configured a second system just like the first to rule out the hardware. Sure enough, both systems had the problem.

I started juggling drivers. I had been quick to load new drivers and firmware, so that felt like a good place to start. I slowly walked the storage controller driver backwards until the issue went away. I settled on this driver:

Type: Driver - Storage Controller

Version: 5.5.0.60-1(11 Jun 2014)

Operating System(s): VMware vSphere 5.5

File name: scsi-hpsa-5.5.0.60-1OEM.550.0.0.1331820.x86_64.vib (65 KB)

esxcli software vib install -v file:/tmp/scsi-hpsa-5.5.0.60-1OEM.550.0.0.1331820.x86_64.vib --force --no-sig-check --maintenance-mode

I didn’t like this as a solution because it left me with new storage controller firmware paired with a very old storage controller driver. I found another DL360 G7 that was configured by someone else for another project and it was running fine. They didn’t update anything. They just loaded the ESXi DVD and ran with it.

I came to the conclusion that it had a valid firmware/driver combination. I liked that setup better than what I had, so that became the recommended deployment: don’t update the firmware, and use the DVD so you don’t have to mess with drivers.

Then our next deployment used that configuration and the issue resurfaced. After ruling out some other variables that could have impacted the results, I ended up rolling the driver back to fix it.

After pinning the driver down, I went hunting for more information on it. Searching for storage latency issues gives you all kinds of useless results. I was hopeful that searching for the driver would lead me to some interesting discussions.

I ended up with an answer to my issue in that last link. But there were a lot of other issues.

In summary, the issue occurs with any HPSA driver later than v60 driving an HP Smart Array P410 controller, of any firmware vintage, with a spare drive configured. The spare drive configuration seems to cause an I/O back-feed into the driver, blowing it up at irregular intervals. The issue will not surface unless a spare drive is configured on the array controller.

At the time of writing, the v114 driver also does not fix the issue.
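If you want to check a host against the "later than v60" rule, here is a rough sketch of how the comparison could be scripted. The helper names are made up for this example, and I am assuming that "v60" corresponds to the fourth dotted field of the VIB version string (the `60` in `5.5.0.60-1OEM.550.0.0.1331820`). It only looks at the driver version; it does not detect whether a spare drive is configured.

```shell
#!/bin/sh
# Hypothetical helpers, not part of any HP or VMware tooling.

# Print the driver build number from an hpsa VIB version string,
# e.g. "5.5.0.60-1OEM.550.0.0.1331820" -> "60".
# Assumption: the build is the last dotted field before the first "-".
hpsa_build() {
    ver="${1%%-*}"       # drop the "-1OEM..." suffix -> "5.5.0.60"
    echo "${ver##*.}"    # keep the last dotted field  -> "60"
}

# Succeed (exit status 0) when the build is newer than v60.
is_newer_than_v60() {
    [ "$(hpsa_build "$1")" -gt 60 ]
}

# On an ESXi host the version string could be read with something like:
#   esxcli software vib list | grep -i hpsa
if is_newer_than_v60 "5.5.0.60-1OEM.550.0.0.1331820"; then
    echo "newer than v60: check for a configured spare drive"
else
    echo "v60 or older"
fi
```

With the driver I settled on, this prints `v60 or older`. Swap in the version string from your own host to test a different driver.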